Test fairness is a critical concern in all high-stakes testing programs.

The ASVAB Testing Program attempts to ensure that the ASVAB tests are fair for all examinees by adhering to the principles outlined in a number of test development guidelines:

- Standards for Educational and Psychological Testing (American Educational Research Association, American Psychological Association, and National Council on Measurement in Education)

- Code of Fair Testing Practices in Education (Joint Committee on Testing Practices)

- Principles for the Validation and Use of Personnel Selection Procedures (Society for Industrial and Organizational Psychology, Inc.)

- Guidelines for Computerized-Adaptive Test Development and Use in Education (American Council on Education)

- Guidelines for Computer-Based Testing (Association of Test Publishers)

- International Guidelines on Computer-Based and Internet Delivered Testing (International Test Commission)

The ASVAB Testing Program also has an oversight group, the Defense Advisory Committee on Military Personnel Testing, which is tasked with ensuring that the ASVAB program adheres to professional testing standards. Members of the committee typically are experts in testing and measurement, industrial-organizational psychology, and/or career psychology. Personnel from the ASVAB Testing Program regularly brief the committee to keep it apprised of all program activities.

Impact

The ASVAB Testing Program takes active steps during test development to uphold the following credos:

- The tests should not be biased.

- Adverse impact should be minimized.

- Test items should not be offensive to any examinee subgroups.

- Items/tests should perform similarly across racial/ethnic/gender groups.

- Test items should perform similarly across paper and computer administrations.

- Lack of exposure to testing medium or testing conditions should not adversely affect examinees’ performance.

- Scores should be comparable across administration modes.

Impact occurs when subgroups that are not matched on ability perform differentially on an item or test. Adverse impact occurs when a subgroup is disadvantaged by those performance differences. Bias occurs when an item or test unfairly favors one group over another.

Bias is problematic because it can negatively affect test validity. Adverse impact does not reflect bias if validity research shows that the test is equally valid for relevant subgroups (i.e., if the regression line relating the test score to a criterion is the same for each subgroup).

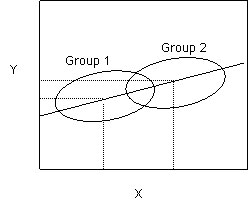

The figure illustrates such a scenario, where Group 2 performs better than Group 1 on a test [X], yet the regression line for predicting performance on the criterion measure [Y] is the same for both groups. Thus, the performance difference does not reflect bias because examinees with the same test scores will have the same predicted performance, regardless of group membership.
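To make the regression criterion concrete, here is a minimal Python sketch that fits an ordinary least-squares line of criterion score Y on test score X separately for each group and checks whether the two lines coincide. The data, the function names (`fit_line`, `predict`, `same_line`), and the 0.05 tolerance are hypothetical, chosen to mirror the figure: Group 2 scores higher on X, yet both groups fall around the same line. A real fairness study would test slope and intercept differences statistically (for example, with moderated regression) rather than compare them against a fixed tolerance.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Line:
    intercept: float
    slope: float


def fit_line(x: list[float], y: list[float]) -> Line:
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return Line(intercept=mean_y - slope * mean_x, slope=slope)


def predict(line: Line, x: float) -> float:
    """Predicted criterion score for test score x."""
    return line.intercept + line.slope * x


def same_line(a: Line, b: Line, tol: float = 0.05) -> bool:
    """Crude check that two groups share one regression line.

    The tolerance is a hypothetical choice; this is not a significance test.
    """
    return abs(a.intercept - b.intercept) <= tol and abs(a.slope - b.slope) <= tol


# Hypothetical data: Group 2 averages 10 points higher on X, but both
# groups scatter around the same line Y = 10 + 0.5 * X.
group1 = fit_line([40, 45, 50, 55, 60], [31, 31.5, 35, 36.5, 41])
group2 = fit_line([50, 55, 60, 65, 70], [36, 36.5, 40, 41.5, 46])

print(group1, group2)
print(same_line(group1, group2))
# Same test score, same predicted criterion score, regardless of group.
print(predict(group1, 55), predict(group2, 55))
```

Because the fitted lines coincide, an examinee with a given X receives the same predicted Y in either group, which is the sense in which the group difference on X does not indicate bias.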

Four-Fifths Rule

The occurrence of adverse impact is often determined using the Four-Fifths Rule:

“A selection rate for any race, sex, or ethnic group which is less than four-fifths (or eighty percent) of the rate for the group with the highest rate will generally be regarded by the Federal enforcement agencies as evidence of adverse impact.”

— Code of Federal Regulations Pertaining to the U.S. Department of Labor

Applications of the Four-Fifths Rule to examinees who qualify for entry into the military (i.e., those generally scoring in AFQT category IIIB or higher) and to examinees who qualify for enlistment incentives (i.e., those generally scoring in AFQT category IIIA or higher) indicate that some adverse impact is associated with the ASVAB. Specifically, the qualification rates for African-American/Black applicants are less than four-fifths of the qualification rates for Caucasian/White applicants, for both entry into the military and enlistment incentives. Likewise, the qualification rates for Hispanic applicants are less than four-fifths of the qualification rates for Non-Hispanic White applicants, for both entry into the military and enlistment incentives.
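The rule reduces to a ratio of rates. The Python sketch below assumes each group's qualification rate plays the role of the selection rate, divides every group's rate by the highest rate, and flags ratios under 0.8. The function names, group labels, and counts are invented for illustration and are not ASVAB data.

```python
from __future__ import annotations


def selection_rate(selected: int, applicants: int) -> float:
    """Proportion of a group's applicants who are selected (here: who qualify)."""
    return selected / applicants


def four_fifths_check(
    rates: dict[str, float], threshold: float = 0.8
) -> dict[str, tuple[float, bool]]:
    """Compare each group's rate with the highest group's rate.

    Returns {group: (impact_ratio, flagged)}, where flagged is True when the
    ratio falls below four-fifths of the highest rate.
    """
    highest = max(rates.values())
    return {
        group: (rate / highest, rate / highest < threshold)
        for group, rate in rates.items()
    }


# Hypothetical qualification counts, for illustration only.
rates = {
    "Group A": selection_rate(600, 1000),  # 0.60, the highest rate
    "Group B": selection_rate(420, 1000),  # 0.42
    "Group C": selection_rate(540, 1000),  # 0.54
}
for group, (ratio, flagged) in four_fifths_check(rates).items():
    print(f"{group}: impact ratio {ratio:.2f}, adverse impact flagged: {flagged}")
```

Here Group B's ratio is about 0.70, below the 0.8 threshold, so it is flagged; Group C's ratio of about 0.90 is not.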


Although adverse impact does occur on the ASVAB, the magnitude of Male/Female, White/Black, and Non-Hispanic White/Hispanic score differences on the Mathematical Knowledge, Arithmetic Reasoning, Word Knowledge, Paragraph Comprehension, and General Science subtests is similar to the magnitude of score differences between like groups on tests of similar domains in other high-stakes test batteries. The consistency of the effect-size trends observed across different test batteries suggests that the adverse impact associated with the ASVAB reflects a societal phenomenon rather than bias in the test.
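The source does not say which effect-size metric was compared; a common choice for group score differences is the standardized mean difference (Cohen's d), sketched below in Python as an assumption. Because d is expressed in pooled standard deviation units, differences from batteries reported on different score scales can be compared directly. The `cohens_d` helper and all scores are hypothetical.

```python
from __future__ import annotations

import math
import statistics


def cohens_d(group1: list[float], group2: list[float]) -> float:
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(group1), len(group2)
    pooled_var = (
        (n1 - 1) * statistics.variance(group1)
        + (n2 - 1) * statistics.variance(group2)
    ) / (n1 + n2 - 2)
    return (statistics.mean(group1) - statistics.mean(group2)) / math.sqrt(pooled_var)


# Two hypothetical batteries on different score scales.
d_battery_a = cohens_d([48, 50, 52, 54, 56], [45, 47, 49, 51, 53])
d_battery_b = cohens_d([101, 105, 109, 113, 117], [95, 99, 103, 107, 111])
print(round(d_battery_a, 2), round(d_battery_b, 2))
```

The raw gaps differ (3 points versus 6 points), but once each is divided by its battery's pooled standard deviation the two effect sizes match, which is the kind of cross-battery comparison the paragraph above describes.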

This conclusion is supported by a study of the sensitivity and fairness of the ASVAB technical tests (Wise, Welsh, Grafton, Foley, Earles, Sawin, & Divgi, 1992). The study compared, across groups, how well ASVAB scores predicted final school grades in Air Force and Navy technical training courses and scores on the Skill Qualification Test for first-term Army recruits. Data from large samples of males, females, whites, and blacks were analyzed, and the prediction lines were similar for all groups.


Conducted Analysis

The ASVAB Testing Program routinely conducts analyses to ensure that items and scores are fair and unbiased.

All new items undergo a rigorous review process that includes (1) internal and external reviews of item content by experts in the domain measured by the items, (2) internal and external sensitivity reviews by trained sensitivity reviewers, and (3) reviews of statistical qualities of the items.

As part of the review of the statistical qualities of new items, differential item functioning (DIF) analyses are conducted. DIF occurs when items perform differently across groups matched on ability. DIF is a necessary, but not sufficient, condition for bias. Because all statistical procedures have some rate of false positive identification, items flagged for DIF typically undergo further review to determine if the statistical finding is supported by the item’s content.
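One widely used way to compare item performance for examinees matched on ability is the Mantel-Haenszel (MH) statistic, which the EBMH procedure used by the program builds on. The Python sketch below assumes examinees have already been grouped into levels of a matching score (for example, total test score) and, within each level, tallies right and wrong answers for a reference group and a focal group. It computes the MH common odds ratio and converts it to the ETS delta scale as MH D-DIF = -2.35 ln(alpha_MH). The `Stratum` layout, the function name, and the counts are hypothetical.

```python
from __future__ import annotations

import math
from typing import NamedTuple


class Stratum(NamedTuple):
    """Item response counts for one level of the matching score."""
    ref_right: int
    ref_wrong: int
    focal_right: int
    focal_wrong: int


def mh_d_dif(strata: list[Stratum]) -> float:
    """Mantel-Haenszel D-DIF on the ETS delta scale.

    Negative values mean the item is harder for the focal group than for
    reference-group examinees with the same matching score.
    """
    num = 0.0  # sum over strata of ref_right * focal_wrong / N_k
    den = 0.0  # sum over strata of ref_wrong * focal_right / N_k
    for s in strata:
        n = s.ref_right + s.ref_wrong + s.focal_right + s.focal_wrong
        if n == 0:
            continue  # an empty score level contributes nothing
        num += s.ref_right * s.focal_wrong / n
        den += s.ref_wrong * s.focal_right / n
    if num == 0 or den == 0:
        raise ValueError("MH odds ratio is undefined for these counts")
    alpha_mh = num / den  # common odds ratio across strata
    return -2.35 * math.log(alpha_mh)


# Hypothetical counts for one item at three matching-score levels.
item = [Stratum(20, 30, 5, 20), Stratum(40, 20, 12, 18), Stratum(45, 5, 15, 5)]
print(f"MH D-DIF: {mh_d_dif(item):.2f}")  # negative: favors the reference group
```

Stratifying on the matching score is what separates DIF from impact: groups that differ in overall ability are only compared within the same score level.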

DIF analyses are conducted for the following pairs of subgroups for which there are sufficient data to provide stable results:

- Whites versus Blacks

- Males versus Females

- Non-Hispanic Whites versus Hispanics

An Empirical Bayes Mantel-Haenszel (EBMH) procedure is used, and the severity of DIF is classified using the following scheme:

| DIF category | Severity | Criterion |
|---|---|---|
| A | Negligible | \|EBMH\| < 1.0 |
| B | Slight to moderate | 1.0 < \|EBMH\| < 1.5 |
| C | Moderate to severe | \|EBMH\| > 1.5 |

The EBMH procedure and the classification scheme are described further in Zwick, Thayer, & Lewis (1999).
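The empirical Bayes step and the A/B/C labels can be sketched in Python as follows, loosely in the spirit of Zwick, Thayer, & Lewis (1999): each item's MH D-DIF is pulled toward the mean over all items, and items with larger standard errors are pulled further. The moment-based prior estimates, the function names (`eb_shrink`, `dif_category`), the example values, and the handling of values falling exactly on 1.0 or 1.5 (assigned to the more severe category) are assumptions made for illustration; consult the paper for the actual estimator.

```python
from __future__ import annotations

import statistics


def eb_shrink(mh_values: list[float], std_errors: list[float]) -> list[float]:
    """Shrink each item's MH D-DIF toward the mean over all items.

    Prior mean and variance are simple moment estimates across items
    (an illustrative assumption, not necessarily the published estimator).
    """
    prior_mean = statistics.mean(mh_values)
    mean_sampling_var = statistics.mean([se ** 2 for se in std_errors])
    # Spread of true DIF = observed spread minus average sampling noise, floored at 0.
    prior_var = max(statistics.pvariance(mh_values) - mean_sampling_var, 0.0)
    shrunk = []
    for d, se in zip(mh_values, std_errors):
        total = prior_var + se ** 2
        weight = prior_var / total if total > 0 else 0.0
        # Noisy items (large se) get small weights and move further toward the mean.
        shrunk.append(prior_mean + weight * (d - prior_mean))
    return shrunk


def dif_category(ebmh: float) -> str:
    """A/B/C label from the 1.0 and 1.5 magnitude cut-offs described in the text."""
    size = abs(ebmh)
    if size < 1.0:
        return "A"  # negligible
    if size < 1.5:
        return "B"  # slight to moderate
    return "C"  # moderate to severe (boundary values assigned upward: assumption)


# Hypothetical MH D-DIF values and standard errors for five items.
mh = [0.2, -0.4, 1.3, -2.1, 0.1]
se = [0.3, 0.3, 0.6, 0.4, 0.3]
for number, value in enumerate(eb_shrink(mh, se), start=1):
    print(number, round(value, 2), dif_category(value))
```

With these hypothetical values, item 3 has a raw statistic in the B range but a large standard error, and shrinkage moves it into the A range, while item 4 remains a C. This illustrates how shrinkage can damp flags driven mainly by sampling noise.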

Items flagged for B or C DIF are reviewed to ascertain whether they provide an unfair advantage for a given group. Items flagged for linguistic DIF (i.e., Non-Hispanic Whites versus Hispanics) are reviewed by linguists fluent in both English and Spanish.